Vision-based Perception for Autonomous Urban Navigation

Abstract

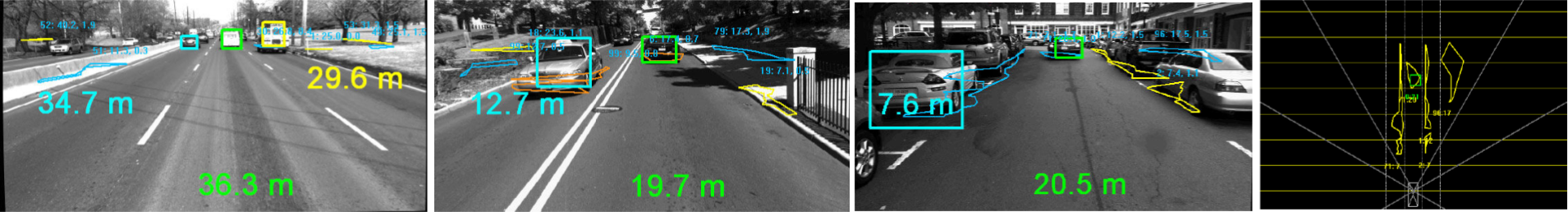

We describe a low-cost vision-based sensing and positioning system that enables intelligent vehicles of the future to drive autonomously in an urban environment with traffic. The system was built by integrating Sarnoff’s algorithms for driver awareness and vehicle safety with commercial off-the-shelf hardware on a robot vehicle. We implemented a modular and parallelized software architecture that allowed us to achieve an overall sensor update rate of 12 Hz with multiple high-resolution HD cameras without sacrificing robustness or in-field performance. The system was field tested on the vehicle of Team Autonomous Solutions, one of the top twenty teams in the 2007 DARPA Urban Challenge competition. In addition to enabling autonomy, our low-cost perception system has an intermediate advantage of providing driver awareness for convenience functions such as adaptive cruise control, lane departure sensing, and forward and side-collision warning.