Ultra-wide Baseline Facade Matching for Geo-localization

Abstract

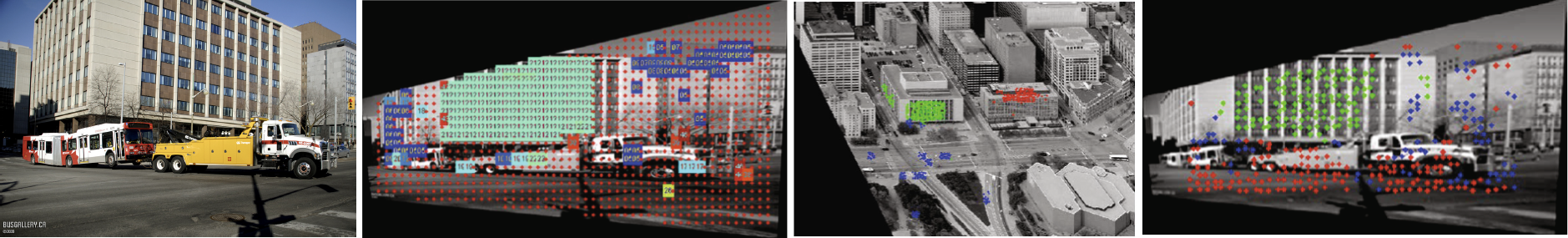

Matching street-level images to a database of airborne images is hard because of extreme viewpoint and illumination differences. Color/gradient distributions and local descriptors fail to match across such views, forcing us to rely on the self-similarity structure of patterns on facades. We propose to capture this structure with a novel “scale-selective self-similarity” (S4) descriptor, which is computed at each point on the facade at its inherent scale. To achieve this, we introduce a new method for scale selection that also enables the extraction and segmentation of facades. Matching is done with a Bayesian classification of the street-view query S4 descriptors given all labeled descriptors in the bird’s-eye-view database. We show experimental results on retrieval accuracy on a challenging set of publicly available imagery and compare with standard SIFT-based techniques.
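As a rough illustration of the matching step, the sketch below labels a query facade by a naive-Bayes nearest-neighbor rule: each query descriptor contributes its squared distance to the closest labeled database descriptor, and the facade with the lowest total cost wins. This is only one plausible reading of "Bayesian classification"; the specific rule, the function names `sq_dist` and `classify_facade`, the facade labels, and the toy 2-D vectors standing in for S4 descriptors are all assumptions made for illustration.

```python
# Hypothetical sketch: naive-Bayes nearest-neighbor labeling of a query facade.
# Descriptors are plain lists of floats; labels are made-up facade IDs.

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify_facade(query_descs, database):
    """Return (best_label, costs) for a set of query descriptors.

    query_descs: list of descriptor vectors from the street-view query.
    database: dict mapping facade label -> list of labeled descriptor vectors.
    """
    costs = {}
    for label, descs in database.items():
        # Assuming descriptors are independent given the label, the negative
        # log-likelihood is approximated by summing, over query descriptors,
        # the squared distance to the nearest descriptor with that label.
        costs[label] = sum(
            min(sq_dist(q, d) for d in descs) for q in query_descs
        )
    best = min(costs, key=costs.get)
    return best, costs


# Toy data: two database facades and a query lying close to the first one.
database = {
    "facade_A": [[0.0, 0.0], [1.0, 0.0]],
    "facade_B": [[5.0, 5.0], [6.0, 5.0]],
}
query = [[0.1, 0.0], [0.9, 0.1]]
label, costs = classify_facade(query, database)
```

Summing per-descriptor costs lets every point on the facade vote, so a few poorly matched descriptors do not decide the label on their own.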